Introduction

In this blog post, I explain why I chose async communication for the LuFeed architecture and how I arrived at that decision.

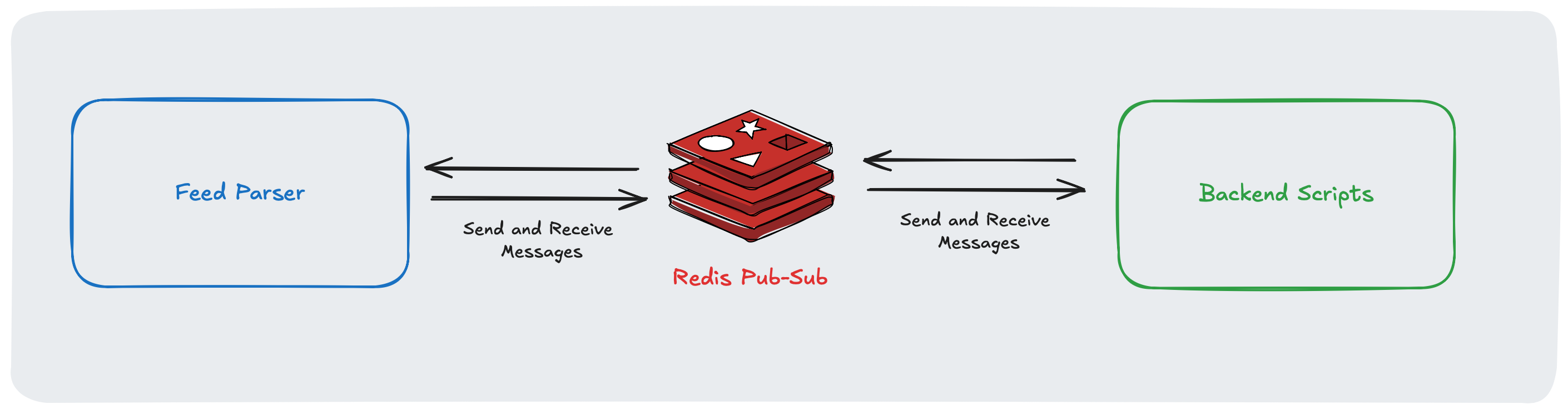

Understanding Components

- Feed Parser: This service handles RSS and feed parsing. It takes URLs and returns the feed information and its items, enriched with OpenGraph metadata.

- Backend Scripts: These scripts handle background processes and database operations.

Previous Design and Issues

Previously, the feed parser was deployed as a REST API, and communication with scripts was handled through synchronous API calls. This approach was problematic for the following reasons:

- Parsing was slow because the API only responded after parsing all items in the feed.

- Parsing feed items sometimes took a long time, which caused network errors.

- Because the API response contained the whole feed at once, the backend scripts received a large payload, and saving it increased the load on Firestore.

The New Approach

While monitoring the logs, I noticed these issues occurring at an increasing rate and decided it was time to address them. Since both components were running on a private VPS instance, I needed a simple yet scalable and performant solution. After evaluating several alternatives, I decided to implement async communication.

The idea was simple: Instead of waiting for all items, the feed parser can publish messages after parsing each item.
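To make the idea concrete, here is a minimal Python sketch of per-item publishing. It is an illustration under assumptions, not LuFeed's actual code: `parse_item`, `publish_items`, and the `feed.items` channel name are hypothetical, and `publish` is any callable that takes a channel and a message. With the redis-py client, its `publish(channel, message)` method could be passed in directly.

```python
import json
from typing import Callable, Iterable


def parse_item(raw: dict) -> dict:
    """Hypothetical stand-in for the real per-item parsing work."""
    return {"title": raw["title"].strip(), "link": raw["link"]}


def publish_items(
    raw_items: Iterable[dict],
    publish: Callable[[str, str], object],
    channel: str = "feed.items",  # assumed channel name
) -> int:
    """Publish every item as soon as it is parsed and return how many were sent."""
    count = 0
    for raw in raw_items:
        item = parse_item(raw)
        # The consumer can start saving this item while later ones are still parsing.
        publish(channel, json.dumps(item))
        count += 1
    return count


if __name__ == "__main__":
    sent = []
    raw_feed = [
        {"title": " First post ", "link": "https://example.com/1"},
        {"title": "Second post", "link": "https://example.com/2"},
    ]
    publish_items(raw_feed, lambda ch, msg: sent.append((ch, msg)))
    print(len(sent))  # 2
```

Because nothing is collected into a batch, a slow item delays only the items after it, and the consumer writes small records one at a time instead of one large response.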

I first considered using a hosted solution like AWS SQS. The SQS free tier was more than sufficient for my needs. However, since I host my application outside of AWS, it would add network latency to every message.

I then started looking for a self-hosted solution. The first option that came to mind was RabbitMQ. I was close to choosing it, but then I remembered something: both components were already using Redis to cache data and reduce the load on external feed URLs and Firestore. Since Redis was already part of the setup, I thought, why not use Redis Pub/Sub?

Redis Pub/Sub offered everything I needed for my application: a simple solution with minimal latency. Backend scripts publish feed info and item parsing requests. The feed parser receives these messages, and after parsing the feed and each item, it publishes the data back to the channel.
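The request and response flow described above can be sketched as follows. This is a hedged illustration, not the real implementation: `InMemoryBroker` is a tiny stand-in for Redis Pub/Sub so the example runs without a server, and the channel names `parser.requests` and `parser.results`, the message fields, and the `fetch_feed` callable are all assumptions made for the example.

```python
import json
from collections import defaultdict
from typing import Callable

REQUESTS = "parser.requests"  # assumed channel names, not from LuFeed
RESULTS = "parser.results"


class InMemoryBroker:
    """Stand-in for Redis Pub/Sub so the sketch runs without a server."""

    def __init__(self) -> None:
        self._subs = defaultdict(list)

    def subscribe(self, channel: str, handler: Callable[[str], None]) -> None:
        self._subs[channel].append(handler)

    def publish(self, channel: str, message: str) -> int:
        # Like Redis PUBLISH: deliver to current subscribers, return their count.
        handlers = list(self._subs[channel])
        for handler in handlers:
            handler(message)
        return len(handlers)


def make_parser(broker: InMemoryBroker, fetch_feed: Callable[[str], dict]):
    """Build the feed parser's handler for incoming parsing requests."""

    def on_request(message: str) -> None:
        url = json.loads(message)["url"]
        feed = fetch_feed(url)  # hypothetical fetch-and-parse step
        # Feed-level info goes out first, then one message per parsed item.
        info = {"type": "feed_info", "url": url, "title": feed["title"]}
        broker.publish(RESULTS, json.dumps(info))
        for item in feed["items"]:
            result = {"type": "item", "url": url, "item": item}
            broker.publish(RESULTS, json.dumps(result))

    return on_request


if __name__ == "__main__":
    def fake_fetch(url: str) -> dict:
        return {"title": "Example Feed", "items": [{"title": "First"}, {"title": "Second"}]}

    broker = InMemoryBroker()
    received = []
    # Backend script side: collect results as they arrive.
    broker.subscribe(RESULTS, lambda m: received.append(json.loads(m)))
    # Feed parser side: listen for parsing requests.
    broker.subscribe(REQUESTS, make_parser(broker, fake_fetch))
    broker.publish(REQUESTS, json.dumps({"url": "https://example.com/feed.xml"}))
    print([m["type"] for m in received])  # ['feed_info', 'item', 'item']
```

With a real Redis instance, the broker would be replaced by a redis-py client: `publish` for sending and a `pubsub()` subscription for receiving. One trade-off to keep in mind is that Redis Pub/Sub is fire-and-forget, so a message published while no subscriber is connected is not delivered later.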

Conclusion

With this approach, the backend scripts no longer wait for an entire feed to be parsed before they can start working. Processing time dropped significantly, and users now see lower response times.